Appendix

Pseudocode algorithms

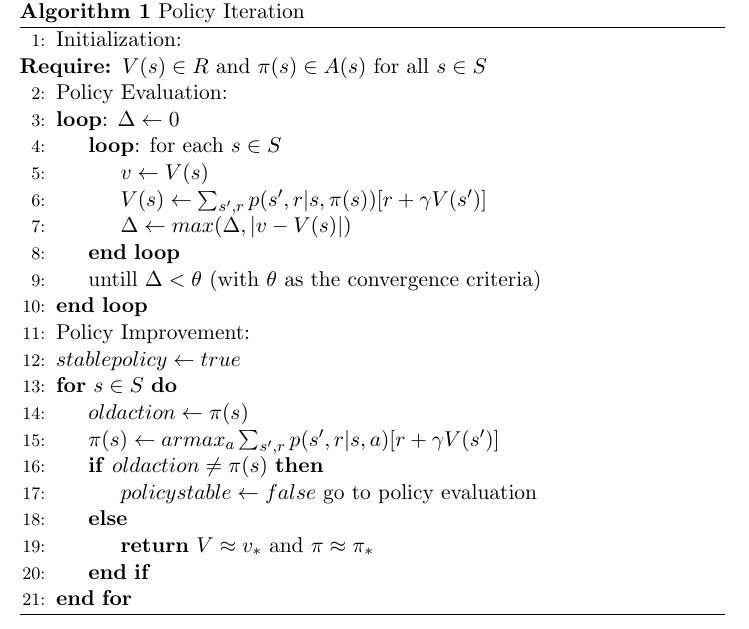

Fig. 12 algorithm for the policy iteration¶
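
The pseudocode in Fig. 12 is only available as an image. Below is a minimal Python sketch of tabular policy iteration in its usual textbook form: iterative policy evaluation alternates with greedy policy improvement, as in SB18. It may differ in details from the figure.

The MDP is a hypothetical five-state chain built by `chain_mdp`. Action 1 moves right and action 0 moves left. Entering the terminal state 4 pays reward 1, and γ = 0.9. All helper names are illustrative.

```python
from typing import Dict, List, Tuple

# P[s][a] -> list of (probability, next_state, reward, done)
Model = Dict[int, Dict[int, List[Tuple[float, int, float, bool]]]]


def chain_mdp(n: int = 5) -> Model:
    """Hypothetical toy MDP: states 0..n-1 on a line, state n-1 is terminal.
    Action 0 moves left (staying put at state 0), action 1 moves right.
    Entering the terminal state pays reward 1; all other transitions pay 0."""
    P: Model = {}
    for s in range(n):
        P[s] = {}
        for a in (0, 1):
            if s == n - 1:  # terminal state: absorbing, no further reward
                P[s][a] = [(1.0, s, 0.0, True)]
                continue
            s_next = max(s - 1, 0) if a == 0 else s + 1
            done = s_next == n - 1
            P[s][a] = [(1.0, s_next, 1.0 if done else 0.0, done)]
    return P


def q_value(P: Model, V: List[float], s: int, a: int, gamma: float) -> float:
    """One-step lookahead: expected reward plus discounted value of the successor."""
    return sum(p * (r + (0.0 if done else gamma * V[s2])) for p, s2, r, done in P[s][a])


def policy_evaluation(P: Model, policy: List[int], gamma: float, theta: float) -> List[float]:
    """Iterative (in-place) evaluation of V^pi until the largest change is below theta."""
    V = [0.0] * len(P)
    while True:
        delta = 0.0
        for s in range(len(P)):
            v_new = q_value(P, V, s, policy[s], gamma)
            delta = max(delta, abs(v_new - V[s]))
            V[s] = v_new
        if delta < theta:
            return V


def policy_iteration(P: Model, gamma: float = 0.9, theta: float = 1e-10):
    policy = [0] * len(P)  # arbitrary initial policy: always move left
    while True:
        V = policy_evaluation(P, policy, gamma, theta)  # policy evaluation step
        stable = True
        for s in range(len(P)):  # policy improvement step
            q = {a: q_value(P, V, s, a, gamma) for a in P[s]}
            best = max(q, key=q.get)
            # Switch only on a strict improvement so ties cannot cause endless flipping
            if q[best] > q[policy[s]] + 1e-12:
                policy[s] = best
                stable = False
        if stable:
            return policy, V


policy, V = policy_iteration(chain_mdp())
print(policy, [round(v, 3) for v in V])  # [1, 1, 1, 1, 0] [0.729, 0.81, 0.9, 1.0, 0.0]
```

The loop starts from the all-left policy. Each improvement round fixes one more state, so the policy needs a few rounds before it stabilises. The final values are γ^(d−1) for a state d steps from the goal.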

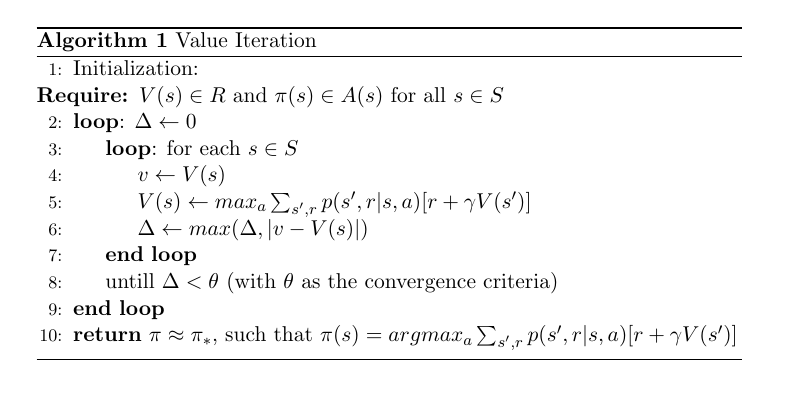

Fig. 13 algorithm for the value iteration¶
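
Value iteration (Fig. 13) can be illustrated on the same kind of hypothetical chain MDP. This sketch is a generic textbook version, not a transcription of the figure. It applies the Bellman optimality backup V(s) ← max_a Σ p [r + γV(s')] until the largest change falls below `theta`, then reads off a greedy policy. `chain_mdp`, `q_value` and the parameter values are illustrative assumptions.

```python
from typing import Dict, List, Tuple

# P[s][a] -> list of (probability, next_state, reward, done)
Model = Dict[int, Dict[int, List[Tuple[float, int, float, bool]]]]


def chain_mdp(n: int = 5) -> Model:
    """Hypothetical toy MDP: states 0..n-1 on a line, state n-1 is terminal.
    Action 0 moves left (staying put at state 0), action 1 moves right.
    Entering the terminal state pays reward 1; all other transitions pay 0."""
    P: Model = {}
    for s in range(n):
        P[s] = {}
        for a in (0, 1):
            if s == n - 1:  # terminal state: absorbing, no further reward
                P[s][a] = [(1.0, s, 0.0, True)]
                continue
            s_next = max(s - 1, 0) if a == 0 else s + 1
            done = s_next == n - 1
            P[s][a] = [(1.0, s_next, 1.0 if done else 0.0, done)]
    return P


def q_value(P: Model, V: List[float], s: int, a: int, gamma: float) -> float:
    """One-step lookahead: expected reward plus discounted value of the successor."""
    return sum(p * (r + (0.0 if done else gamma * V[s2])) for p, s2, r, done in P[s][a])


def value_iteration(P: Model, gamma: float = 0.9, theta: float = 1e-10):
    V = [0.0] * len(P)
    while True:
        delta = 0.0
        for s in range(len(P)):
            # Bellman optimality backup: keep the best one-step lookahead
            v_new = max(q_value(P, V, s, a, gamma) for a in P[s])
            delta = max(delta, abs(v_new - V[s]))
            V[s] = v_new
        if delta < theta:
            break
    # Extract a deterministic policy that is greedy with respect to the converged V
    policy = [max(P[s], key=lambda a: q_value(P, V, s, a, gamma)) for s in range(len(P))]
    return policy, V


policy, V = value_iteration(chain_mdp())
print(policy, [round(v, 3) for v in V])  # [1, 1, 1, 1, 0] [0.729, 0.81, 0.9, 1.0, 0.0]
```

Unlike policy iteration, no explicit policy is maintained during the sweeps. On this chain both methods reach the same values and the same greedy policy.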

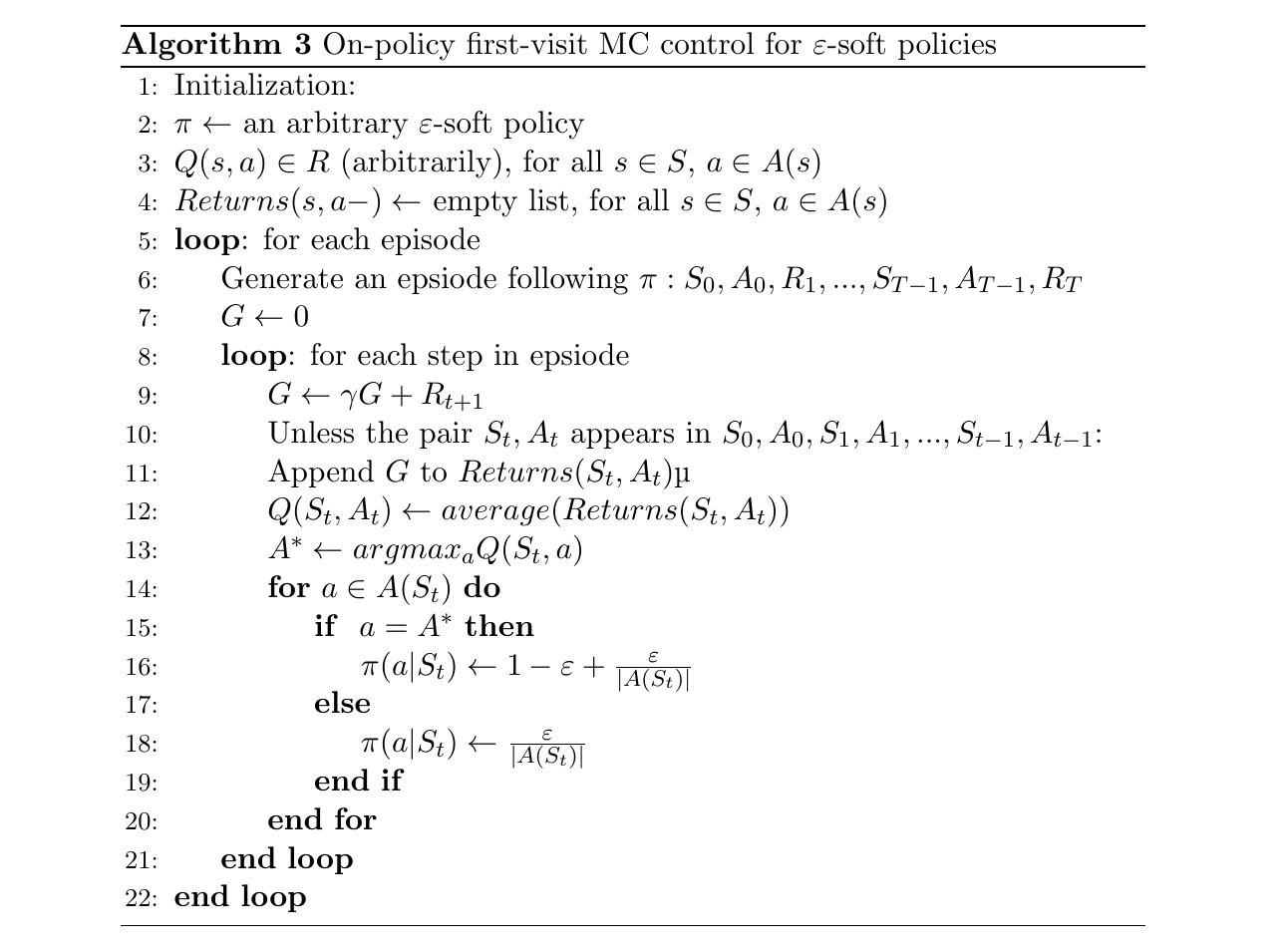

Fig. 14 algorithm for MC method on-policy¶
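
Fig. 14 is also only an image. The following is a minimal sketch of on-policy first-visit Monte Carlo control with an ε-greedy (ε-soft) policy, in the style of the textbook treatment in SB18. Whether the figure uses first-visit or every-visit updates is an assumption here.

The method is model-free: it learns Q(s, a) from sampled episodes rather than from transition probabilities. The environment (`step`, a hypothetical five-state chain where reaching state 4 pays 1) and all parameter values are illustrative.

```python
import random
from collections import defaultdict

# Hypothetical environment (assumption): a chain of states 0..4, state 4 terminal.
N_STATES = 5
ACTIONS = (0, 1)  # 0 = move left (stay put at state 0), 1 = move right


def step(s, a):
    """Return (next_state, reward, done); reward 1 only on reaching the terminal state."""
    s_next = max(s - 1, 0) if a == 0 else s + 1
    done = s_next == N_STATES - 1
    return s_next, (1.0 if done else 0.0), done


def epsilon_greedy(Q, s, epsilon, rng):
    """Explore uniformly with probability epsilon, otherwise act greedily w.r.t. Q."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(Q[(s, a)] for a in ACTIONS)
    # Break ties at random so the all-zero initial Q does not favour one action
    return rng.choice([a for a in ACTIONS if Q[(s, a)] == best])


def mc_control_on_policy(n_episodes=5000, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    rng = random.Random(seed)
    Q = defaultdict(float)      # action-value estimates Q(s, a)
    visits = defaultdict(int)   # number of first-visit returns averaged into Q(s, a)
    for _ in range(n_episodes):
        # 1. Generate one episode from state 0 with the current epsilon-greedy policy
        episode, s = [], 0
        for _ in range(max_steps):
            a = epsilon_greedy(Q, s, epsilon, rng)
            s_next, r, done = step(s, a)
            episode.append((s, a, r))
            s = s_next
            if done:
                break
        # 2. Record the time step of the first visit to each (s, a) pair
        first = {}
        for t, (s_t, a_t, _) in enumerate(episode):
            first.setdefault((s_t, a_t), t)
        # 3. Accumulate the return G backwards and average it in at first visits only;
        #    the policy improves implicitly because it stays epsilon-greedy w.r.t. Q
        G = 0.0
        for t in reversed(range(len(episode))):
            s_t, a_t, r_t = episode[t]
            G = gamma * G + r_t
            if first[(s_t, a_t)] == t:
                visits[(s_t, a_t)] += 1
                Q[(s_t, a_t)] += (G - Q[(s_t, a_t)]) / visits[(s_t, a_t)]
    return Q


Q = mc_control_on_policy()
greedy = [max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(N_STATES - 1)]
print(greedy)
```

With enough episodes, the greedy action read from Q should be "right" (1) in every non-terminal state. Because the policy stays ε-soft, the learned Q values describe the exploring policy rather than the optimal deterministic one.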

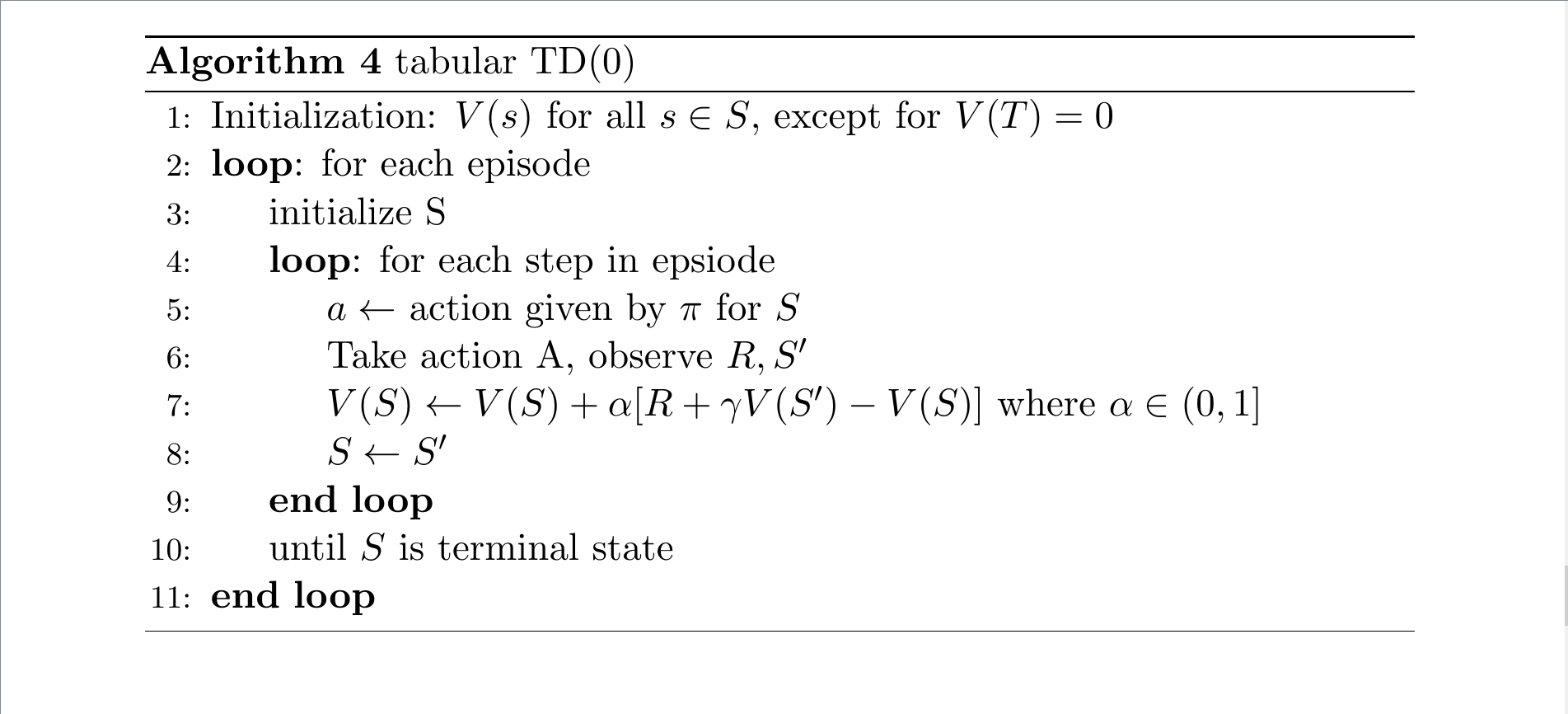

Fig. 15 algorithm for TD(0)¶
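
Fig. 15 is likewise an image. The sketch below shows tabular TD(0) prediction: it estimates the state-value function of a fixed policy with the update V(S) ← V(S) + α[R + γV(S') − V(S)].

It assumes the five-state random walk example from Sutton and Barto (SB18). Episodes start in the middle state, the reward is 1 only on exiting to the right, and γ = 1. The true values are 1/6, 2/6, ..., 5/6, so the estimates can be checked. The task, `step`, `policy`, α and the episode count are illustrative choices, not taken from the figure.

```python
import random

# Hypothetical task (assumption): random walk with non-terminal states 1..5,
# terminal states 0 and 6; reward 1 only when the walk exits on the right.
N_INNER = 5
LEFT_END, RIGHT_END = 0, N_INNER + 1


def policy(s: int, rng: random.Random) -> int:
    """The fixed policy being evaluated: ignores s, moves -1 or +1 with equal probability."""
    return rng.choice((-1, 1))


def step(s: int, move: int):
    """Return (next_state, reward, done)."""
    s_next = s + move
    reward = 1.0 if s_next == RIGHT_END else 0.0
    return s_next, reward, s_next in (LEFT_END, RIGHT_END)


def td0(n_episodes: int = 10000, alpha: float = 0.01, gamma: float = 1.0, seed: int = 0):
    rng = random.Random(seed)
    V = [0.5] * (N_INNER + 2)
    V[LEFT_END] = V[RIGHT_END] = 0.0  # terminal values stay 0
    for _ in range(n_episodes):
        s, done = (N_INNER + 1) // 2, False  # start in the middle state
        while not done:
            s_next, r, done = step(s, policy(s, rng))
            # TD(0) update toward the bootstrapped target R + gamma * V(S')
            target = r + (0.0 if done else gamma * V[s_next])
            V[s] += alpha * (target - V[s])
            s = s_next
    return V


V = td0()
true_values = [k / 6 for k in range(1, N_INNER + 1)]  # exact values for this task
print([round(v, 2) for v in V[1:N_INNER + 1]])
```

With a constant step size the estimates keep fluctuating around the true values. A smaller α reduces that noise at the cost of slower learning.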

- ADBB17

Kai Arulkumaran, Marc Peter Deisenroth, Miles Brundage, and Anil Anthony Bharath. A brief survey of deep reinforcement learning. arXiv preprint arXiv:1708.05866, 2017.

- AKAM+21

Ahmad Taher Azar, Anis Koubaa, Nada Ali Mohamed, Habiba A Ibrahim, Zahra Fathy Ibrahim, Muhammad Kazim, Adel Ammar, Bilel Benjdira, Alaa M Khamis, Ibrahim A Hameed, and others. Drone deep reinforcement learning: a review. Electronics, 10(9):999, 2021.

- BI99

Leemon C Baird III. Reinforcement learning through gradient descent. Technical Report, Carnegie Mellon University, Department of Computer Science, Pittsburgh, PA, 1999.

- BHB+20

André Barreto, Shaobo Hou, Diana Borsa, David Silver, and Doina Precup. Fast reinforcement learning with generalized policy updates. Proceedings of the National Academy of Sciences, 117(48):30079–30087, 2020.

- BFH17

Qianwen Bi, Michael Finke, and Sandra J Huston. Financial software use and retirement savings. Journal of Financial Counseling and Planning, 28(1):107–128, 2017.

- BDTS20

Rachel Qianwen Bi, Lukas R Dean, Jingpeng Tang, and Hyrum L Smith. Limitations of retirement planning software: examining variance between inputs and outputs. Journal of Financial Service Professionals, 2020.

- Blo18

Daniel Alexandre Bloch. Machine learning: models and algorithms. Quantitative Analytics, 2018.

- BS11

Kenneth Bruhn and Mogens Steffensen. Household consumption, investment and life insurance. Insurance: Mathematics and Economics, 48(3):315–325, 2011.

- CL20

Shou Chen and Guangbing Li. Time-inconsistent preferences, consumption, investment and life insurance decisions. Applied Economics Letters, 27(5):392–399, 2020.

- DPMSNR14

Albert De-Paz, Jesus Marin-Solano, Jorge Navas, and Oriol Roch. Consumption, investment and life insurance strategies with heterogeneous discounting. Insurance: Mathematics and Economics, 54:66–75, 2014.

- DH20

Matthew Dixon and Igor Halperin. G-learner and GIRL: goal based wealth management with reinforcement learning. arXiv preprint arXiv:2002.10990, 2020.

- DHB20

Matthew F Dixon, Igor Halperin, and Paul Bilokon. Machine Learning in Finance: From Theory to Practice. Springer International Publishing AG, Cham, 2020. ISBN 9783030410674.

- Dol10

Victor Dolk. Survey reinforcement learning. Eindhoven University of Technology, 2010.

- DMBE18

Taft Dorman, Barry S Mulholland, Qianwen Bi, and Harold Evensky. The efficacy of publicly-available retirement planning tools. Available at SSRN 2732927, 2018.

- EWC21

Maria K Eckstein, Linda Wilbrecht, and Anne GE Collins. What do reinforcement learning models measure? Interpreting model parameters in cognition and neuroscience. Current Opinion in Behavioral Sciences, 41:128–137, 2021.

- FPT15

Roy Fox, Ari Pakman, and Naftali Tishby. Taming the noise in reinforcement learning via soft updates. arXiv preprint arXiv:1512.08562, 2015.

- FranccoisLHI+18

Vincent François-Lavet, Peter Henderson, Riashat Islam, Marc G Bellemare, and Joelle Pineau. An introduction to deep reinforcement learning. arXiv preprint arXiv:1811.12560, 2018.

- GP13

Matthieu Geist and Olivier Pietquin. Algorithmic survey of parametric value function approximation. IEEE Transactions on Neural Networks and Learning Systems, 24(6):845–867, 2013.

- GulerLP19

Batuhan Güler, Alexis Laignelet, and Panos Parpas. Towards robust and stable deep learning algorithms for forward backward stochastic differential equations. arXiv preprint arXiv:1910.11623, 2019.

- Ham18

Ahmad Hammoudeh. A concise introduction to reinforcement learning. 2018.

- HJ+20

Jiequn Han, Arnulf Jentzen, and others. Algorithms for solving high dimensional PDEs: from nonlinear Monte Carlo to machine learning. arXiv preprint arXiv:2008.13333, 2020.

- HJW17

Jiequn Han, Arnulf Jentzen, and Weinan E. Overcoming the curse of dimensionality: solving high-dimensional partial differential equations using deep learning. arXiv preprint arXiv:1707.02568, pages 1–13, 2017.

- HJW18

Jiequn Han, Arnulf Jentzen, and Weinan E. Solving high-dimensional partial differential equations using deep learning. Proceedings of the National Academy of Sciences, 115(34):8505–8510, 2018.

- Her11

Hal E Hershfield. Future self-continuity: how conceptions of the future self transform intertemporal choice. Annals of the New York Academy of Sciences, 1235:30, 2011.

- KSL19

Sung-Kyun Kim, Oren Salzman, and Maxim Likhachev. POMHDP: search-based belief space planning using multiple heuristics. In Proceedings of the International Conference on Automated Planning and Scheduling, volume 29, pages 734–744, 2019.

- KC21

Yeo Jin Kim and Min Chi. Time-aware Q-networks: resolving temporal irregularity for deep reinforcement learning. arXiv preprint arXiv:2105.02580, 2021.

- KS15

Morten Tolver Kronborg and Mogens Steffensen. Optimal consumption, investment and life insurance with surrender option guarantee. Scandinavian Actuarial Journal, 2015(1):59–87, 2015.

- Leu94

Siu Fai Leung. Uncertain lifetime, the theory of the consumer, and the life cycle hypothesis. 1994.

- Lev18

Sergey Levine. Reinforcement learning and control as probabilistic inference: tutorial and review. arXiv preprint arXiv:1805.00909, 2018.

- MVHS14

Ashique Rupam Mahmood, Hado Van Hasselt, and Richard S Sutton. Weighted importance sampling for off-policy learning with linear function approximation. In Advances in Neural Information Processing Systems (NIPS), pages 3014–3022, 2014.

- Mer69

Robert C Merton. Lifetime portfolio selection under uncertainty: the continuous-time case. The Review of Economics and Statistics, pages 247–257, 1969.

- Mer75

Robert C Merton. Optimum consumption and portfolio rules in a continuous-time model. In Stochastic Optimization Models in Finance, pages 621–661. Elsevier, 1975.

- MBJ20a

Thomas M Moerland, Joost Broekens, and Catholijn M Jonker. A framework for reinforcement learning and planning. arXiv preprint arXiv:2006.15009, 2020.

- MBJ20b

Thomas M Moerland, Joost Broekens, and Catholijn M Jonker. Model-based reinforcement learning: a survey. arXiv preprint arXiv:2006.16712, 2020.

- MJ20

Amit Kumar Mondal and N Jamali. A survey of reinforcement learning techniques: strategies, recent development, and future directions. arXiv preprint arXiv:2001.06921, 2020.

- NRC20

Muddasar Naeem, S Tahir H Rizvi, and Antonio Coronato. A gentle introduction to reinforcement learning and its application in different fields. IEEE Access, 2020.

- NZKN19

Farzad Niroui, Kaicheng Zhang, Zendai Kashino, and Goldie Nejat. Deep reinforcement learning robot for search and rescue applications: exploration in unknown cluttered environments. IEEE Robotics and Automation Letters, 4(2):610–617, 2019. doi:10.1109/LRA.2019.2891991.

- PRD96

Elena Pashenkova, Irina Rish, and Rina Dechter. Value iteration and policy iteration algorithms for Markov decision problem. In AAAI’96: Workshop on Structural Issues in Planning and Temporal Reasoning. Citeseer, 1996.

- PVW11

James M Poterba, Steven F Venti, and David A Wise. Were they prepared for retirement? Financial status at advanced ages in the HRS and AHEAD cohorts. In Investigations in the Economics of Aging, pages 21–69. University of Chicago Press, 2011.

- Rai18

Maziar Raissi. Forward-backward stochastic neural networks: deep learning of high-dimensional partial differential equations. arXiv preprint arXiv:1804.07010, 2018.

- Ric75

Scott F Richard. Optimal consumption, portfolio and life insurance rules for an uncertain lived individual in a continuous time model. Journal of Financial Economics, 2(2):187–203, 1975.

- RMM18

Lev Rozonoer, Boris Mirkin, and Ilya Muchnik. Braverman readings in machine learning. In Key Ideas from Inception to Current State: International Conference Commemorating the 40th Anniversary of Emmanuil Braverman's Decease, Boston, MA, Invited Talks. Springer International Publishing, Cham, 2018.

- San21

Nimish Sanghi. Deep Reinforcement Learning with Python: With PyTorch, TensorFlow and OpenAI Gym. Apress L. P., Berkeley, CA, 2021. ISBN 1484268083.

- SW16

Yang Shen and Jiaqin Wei. Optimal investment-consumption-insurance with random parameters. Scandinavian Actuarial Journal, 2016(1):37–62, 2016.

- SBLL19

Joohyun Shin, Thomas A Badgwell, Kuang-Hung Liu, and Jay H Lee. Reinforcement learning–overview of recent progress and implications for process control. Computers & Chemical Engineering, 127:282–294, 2019.

- SB18

Richard S Sutton and Andrew G Barto. Reinforcement learning: An introduction. MIT Press, 2018.

- VOW12

Martijn Van Otterlo and Marco Wiering. Reinforcement learning and Markov decision processes. In Reinforcement learning, pages 3–42. Springer, 2012.

- WZL09

Fei-Yue Wang, Huaguang Zhang, and Derong Liu. Adaptive dynamic programming: an introduction. IEEE Computational Intelligence Magazine, 4(2):39–47, 2009.

- WZZ19

Haoran Wang, Thaleia Zariphopoulou, and Xun Yu Zhou. Exploration versus exploitation in reinforcement learning: a stochastic control approach. Available at SSRN 3316387, 2019.

- WCJW20

Jiaqin Wei, Xiang Cheng, Zhuo Jin, and Hao Wang. Optimal consumption–investment and life-insurance purchase strategy for couples with correlated lifetimes. Insurance: Mathematics and Economics, 91:244–256, 2020.

- WHJ17

Weinan E, Jiequn Han, and Arnulf Jentzen. Deep learning-based numerical methods for high-dimensional parabolic partial differential equations and backward stochastic differential equations. Communications in Mathematics and Statistics, 5(4):349–380, 2017.

- Yaa65

Menahem E Yaari. Uncertain lifetime, life insurance, and the theory of the consumer. The Review of Economic Studies, 32(2):137–150, 1965.

- YLL+19

Niko Yasui, Sungsu Lim, Cam Linke, Adam White, and Martha White. An empirical and conceptual categorization of value-based exploration methods. ICML Exploration in Reinforcement Learning Workshop, 2019.

- Ye06

Jinchun Ye. Optimal life insurance purchase, consumption and portfolio under an uncertain life. University of Illinois at Chicago, 2006.